Introduction

Machine learning algorithms have had a lot of success in recent years, owing to their strong performance on a wide range of tasks. By learning from previous data, an algorithm can become more accurate at predicting results without being explicitly programmed. The concepts of "machine learning" and "artificial intelligence" first appeared in the 1950s.

In 1950, Alan Mathison Turing, a British logician and computer science pioneer, published his seminal paper "Computing Machinery and Intelligence", which addresses the question "Can machines think?" and proposes the "Turing Test" for assessing whether a machine is intelligent. The idea is to have an observer converse with two counterparts, one a human and the other a machine, hidden behind a closed door. Turing claims that if the observer cannot tell the human from the machine, the machine has passed the test and is intelligent. In 1951, Christopher Strachey wrote one of the first artificial intelligence programs, a checkers (draughts) player. By 1952, this program could play a complete game of checkers at a reasonable speed.

Artificial intelligence has progressed further since then. It can now recognize and respond to events in domains such as commerce, recommendation systems, and medicine, among others. Several algorithms have also emerged, such as decision trees, Naive Bayes, support vector machines (SVM), random forests, k-nearest neighbors (KNN), and neural networks. They are used in a variety of tasks, including classification and regression.

One of the most significant machine learning methods is the neural network, which is loosely inspired by the biological brain. In its basic form it is made up of three layers: an input layer, an output layer, and a hidden layer between them. To solve more difficult problems, this technique was extended by adding more hidden layers. A network with several hidden layers (two or more) instead of a single one is called a deep neural network, and training such networks is called deep learning.

In recent years, deep learning has helped researchers make significant advances in the field of artificial intelligence. Deep learning algorithms fall into four types:

- Supervised learning: teaches models to produce the desired output using a training set that comprises inputs and correct outputs;

- Unsupervised learning: uses a training set of inputs with no correct outputs, so the model has to discover structure in the data on its own;

- Semi-supervised learning: teaches models using a training set in which only some of the inputs come with correct outputs;

- Reinforcement learning: a training strategy that rewards desirable actions while penalizing undesirable ones. A reinforcement learning agent can sense and interpret its environment, act, and learn through trial and error.

Several deep learning architectures, such as the Convolutional Neural Network (CNN), Autoencoders, and the Recurrent Neural Network (RNN), are used for classification (medical image classification, face detection, spam classification, etc.), segmentation (of satellite images, of medical images such as breast cancer scans, etc.), text classification (for example, understanding the meaning of a text), and more.

In 2014, Ian Goodfellow and his colleagues took on a computer vision challenge: creating a system that could create photos by itself. As a result, they came up with the idea of the Generative Adversarial Network (GAN), which is now one of the most impactful architectures.

In this article, we will try to answer these questions: What are generative adversarial networks and how do they work? What are the applications of generative adversarial networks? What is the difference between a GAN and a convolutional neural network (CNN)?

What are Generative adversarial networks and how do they work?

A GAN is a neural network architecture that has become one of the most popular in recent years. According to Yann LeCun, GANs are the most interesting idea in machine learning in the last ten years.

It's made up of two neural networks. The generator neural network produces new data instances, while the discriminator neural network examines them for authenticity.

To understand this better, imagine an apprentice chef taking an exam in which the task is to create a new dish using the ingredients specified by the master. Once the dish is prepared, the master decides whether or not it deserves to be added to the list of meals served in restaurants.

The generator learns to map random noise to synthetic samples that follow the distribution of the training data, while the discriminator acts as a controller, classifying the data it receives as fake or real.

The generator's goal is to create artificial data that the discriminator identifies as real.
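The two roles can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the layer sizes, the `tanh` activations, and the names `generator` and `discriminator` are hypothetical, and the weights are random and untrained, so the probabilities it returns carry no meaning yet.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
NOISE_DIM, DATA_DIM, HIDDEN = 8, 4, 16

def sigmoid(u):
    return 1.0 / (1.0 + np.exp(-u))

# Randomly initialised, untrained weights.
G_W1 = rng.normal(0.0, 0.1, (NOISE_DIM, HIDDEN))
G_W2 = rng.normal(0.0, 0.1, (HIDDEN, DATA_DIM))
D_W1 = rng.normal(0.0, 0.1, (DATA_DIM, HIDDEN))
D_W2 = rng.normal(0.0, 0.1, (HIDDEN, 1))

def generator(z):
    """Map a batch of noise vectors to a batch of synthetic samples."""
    hidden = np.tanh(z @ G_W1)
    return np.tanh(hidden @ G_W2)

def discriminator(x):
    """Return, for each sample, the estimated probability that it is real."""
    hidden = np.tanh(x @ D_W1)
    return sigmoid(hidden @ D_W2).ravel()

z = rng.normal(size=(5, NOISE_DIM))   # random noise
fake = generator(z)                   # five synthetic samples
p_real = discriminator(fake)          # discriminator's verdict on each one
```

In a real GAN both functions would be much larger networks, and the weights would be learned rather than drawn at random.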

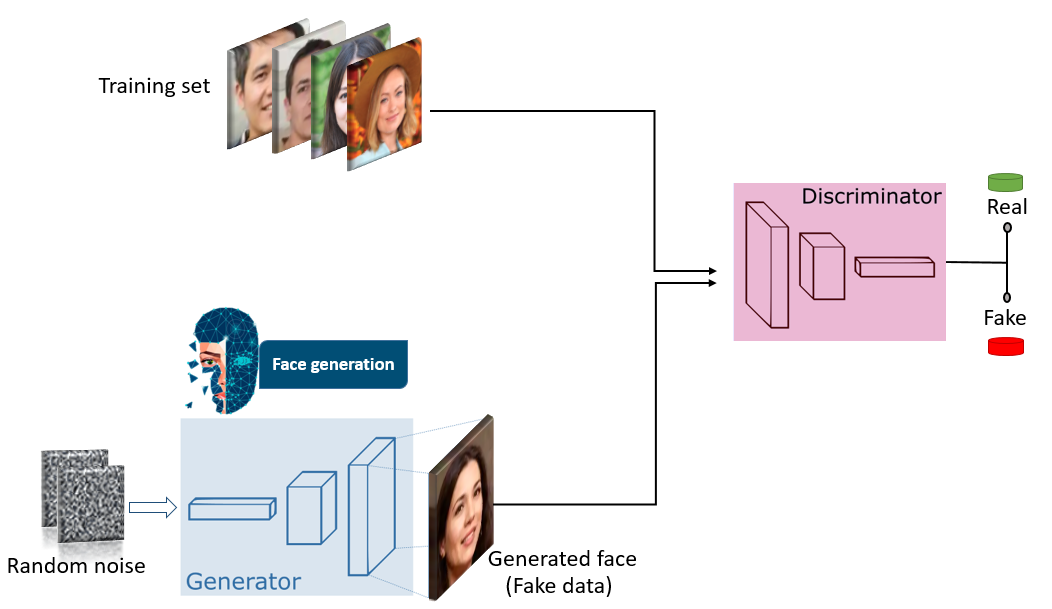

GAN architecture

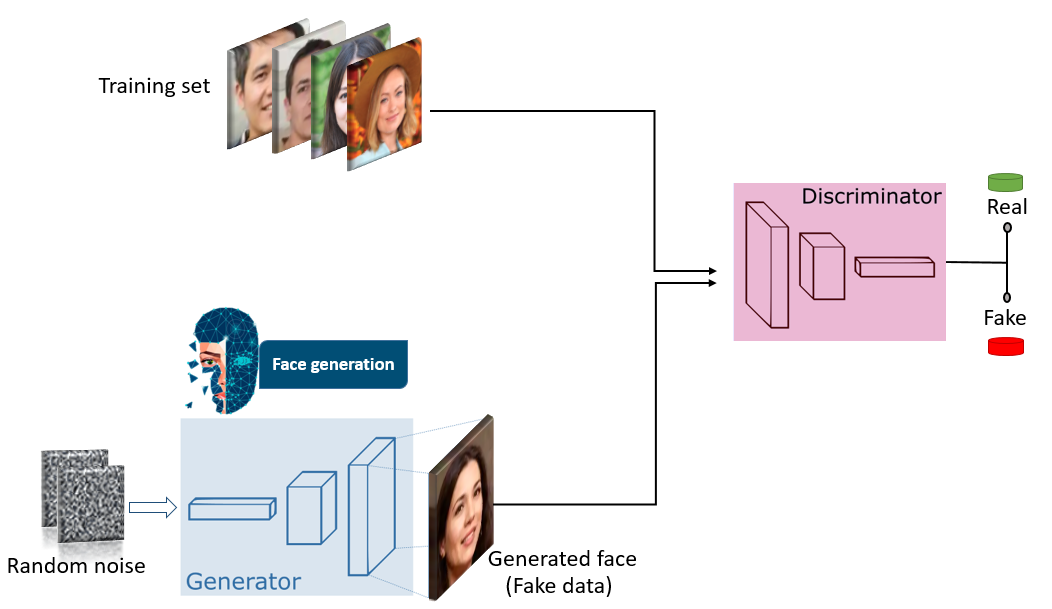

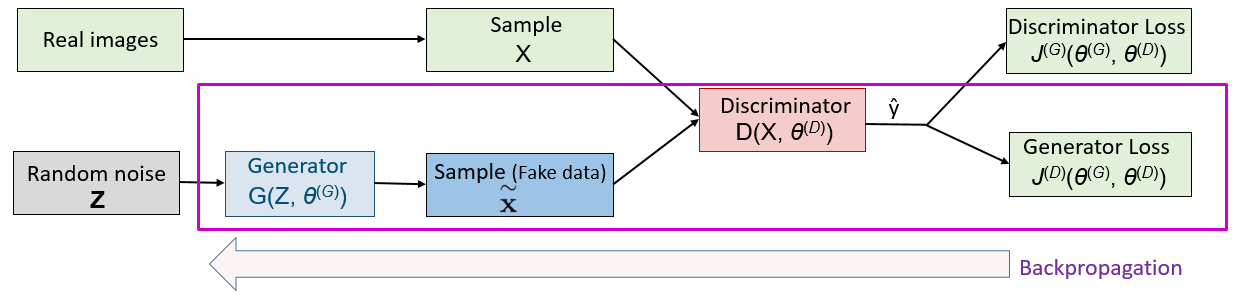

The GAN architecture includes:

- Random input / random noise: the input of the generator. It is the raw material from which the synthetic data is built: the generator converts this noise into a useful result, and sampling different noise lets the GAN create a wide range of data;

- Generator: a neural network that generates data (face images in our case) from the random noise;

- Training dataset: a large number of real samples. The discriminator uses these samples to compare the synthetic data with real data;

- Discriminator: a classifier that distinguishes the real data from the data produced by the generator.

Figure: Generative adversarial network architecture

Let's take a closer look at the generator and discriminator

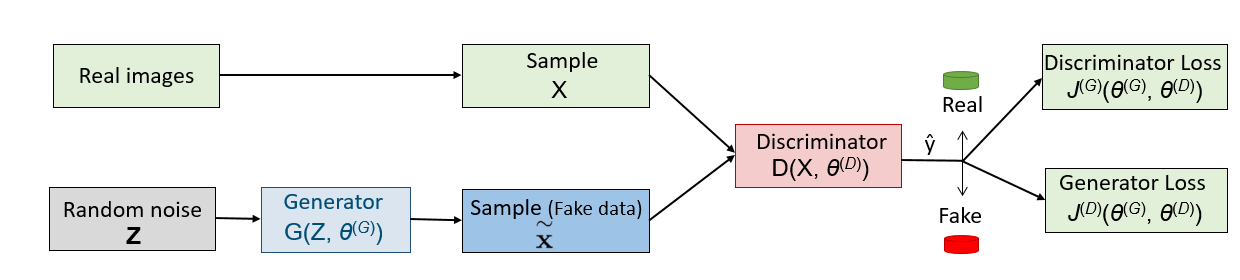

These two models are the crux of the GAN. Both networks are trained at the same time. The Generator (G) seeks to increase the amount of fake data that the discriminator classifies as real, while the Discriminator (D) aims to decrease it.

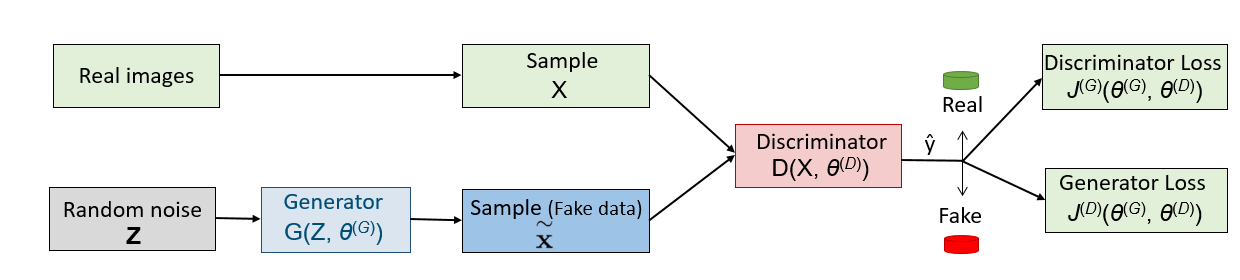

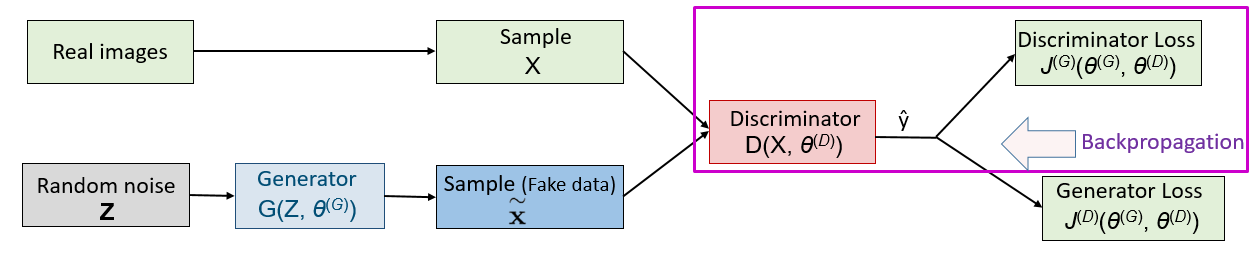

The figure below shows the generator, the discriminator, and the formulas of their loss functions.

Where:

θ(G): generator parameters

θ(D): discriminator parameters
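For reference, the objective from the original GAN paper (Goodfellow et al., 2014) can be written as a two-player minimax game. The notation is an assumption made for this sketch: x is a real sample drawn from the data distribution, z is a noise vector drawn from the noise distribution, and θ(G) and θ(D) are the parameters listed above. The figure may present the same losses in a different but equivalent form.

```latex
\min_{\theta^{(G)}} \max_{\theta^{(D)}} V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x; \theta^{(D)})\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z; \theta^{(G)}); \theta^{(D)})\big)\big]
```

The discriminator tries to make both terms large (real samples scored near 1, generated samples near 0), while the generator tries to make the second term small by pushing D(G(z)) toward 1.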

Figure: Generative adversarial network architecture with functions

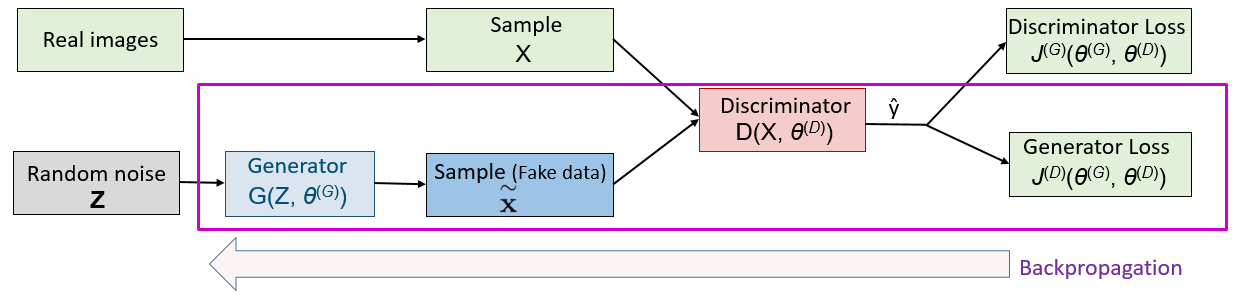

The generator training process:

The generator can be any deep neural network architecture that suits the application domain and is capable of generating synthetic data that follows the distribution of the training set.

The generator's training process begins with random noise, which is used to generate the output. This output is fed into the discriminator, which classifies it (as fake or real). The generator loss is measured from this classification, and the generator's weights are updated through backpropagation. The gradient flows back through the discriminator, but only the generator's weights change during this step.
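The generator step described above can be sketched on a deliberately tiny model. Everything here is an assumption made for illustration: the generator is a one-dimensional linear map `a * z + b`, the discriminator is a fixed logistic unit with parameters `w` and `c`, and the loss is the commonly used non-saturating form `-log D(G(z))` rather than necessarily the one shown in the figure.

```python
import numpy as np

def sigmoid(u):
    return 1.0 / (1.0 + np.exp(-u))

def generator_step(a, b, w, c, z, lr=0.1):
    """One gradient step on the generator parameters (a, b); (w, c) stay fixed."""
    x_fake = a * z + b                    # generator output
    d_fake = sigmoid(w * x_fake + c)      # discriminator's probability of "real"
    loss = -np.mean(np.log(d_fake))       # low when fakes are judged real
    # Backpropagate through the discriminator down to the generator output.
    dloss_dx = -(1.0 - d_fake) * w
    grad_a = np.mean(dloss_dx * z)
    grad_b = np.mean(dloss_dx)
    return a - lr * grad_a, b - lr * grad_b, loss

rng = np.random.default_rng(0)
z = rng.normal(size=256)                  # random noise
a, b = 1.0, 0.0                           # hypothetical initial generator parameters
w, c = 1.0, -2.0                          # hypothetical fixed discriminator parameters

a1, b1, loss_before = generator_step(a, b, w, c, z)
_, _, loss_after = generator_step(a1, b1, w, c, z)
```

After one update the generator loss on the same noise batch is lower, because the samples have moved toward the region the discriminator scores as real.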

Figure: Generator training process

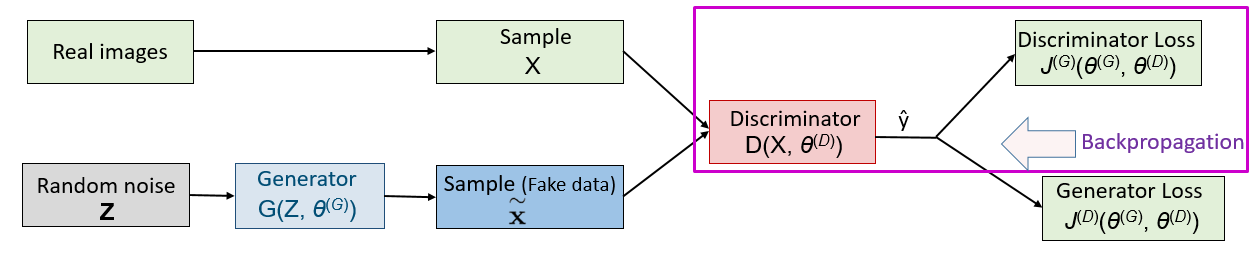

The discriminator training process:

The discriminator classifies both real and fake data throughout the training phase. The discriminator loss function penalizes wrong classifications: its value increases if the discriminator misclassifies a real sample as fake or a fake sample as real. The discriminator ignores the generator loss function and uses only its own loss function (shown below). Finally, it adjusts its weights through backpropagation.
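A minimal sketch of such a loss, assuming the usual binary cross-entropy form in which real samples have target 1 and generated samples have target 0. The function name `discriminator_loss` and the example probabilities are hypothetical.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy over real samples (target 1) and fake samples (target 0)."""
    d_real = np.clip(d_real, eps, 1.0 - eps)   # avoid log(0)
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    return -np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake))

# Discriminator outputs = estimated probability that each sample is real.
good = discriminator_loss(np.array([0.9, 0.8]), np.array([0.1, 0.2]))  # mostly right
bad = discriminator_loss(np.array([0.2, 0.1]), np.array([0.8, 0.9]))   # mostly wrong
```

Here `bad` is larger than `good`, which matches the behaviour described above: the more the discriminator confuses real and fake samples, the higher its loss.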

Figure: Discriminator training process

What are the applications of generative adversarial networks?

Since 2014, the Generative Adversarial Network (GAN) has been one of the most influential neural network architectures in machine learning. It is used in a variety of areas, such as:

Face Aging

Several researchers have used GANs to simulate the aging of a face, for example Grigory Antipov et al., 2017 (Face aging with conditional generative adversarial networks).

Many apps that offer similar face-aging effects are available on the Play Store right now.

Generate Image Datasets

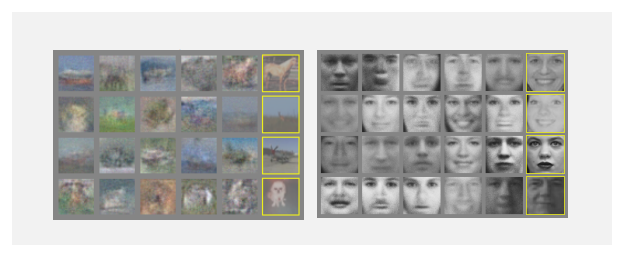

Ian Goodfellow's objective in 2014 was to generate images. He and his colleagues stated this purpose in their original paper, which introduced the model for the first time.

They put the proposed model to the test on a variety of image datasets, including MNIST, CIFAR-10, and the Toronto Face Database. The GAN produces good results, as seen in the figure below.

Figure: Image taken from the original paper of Ian Goodfellow et al., 2014

Several researchers have used generative adversarial networks to produce images for diverse tasks, such as generating face images, images of bedrooms, and so on, using datasets such as Large-scale Scene Understanding (LSUN), ImageNet-1k, and others.

Healthcare

We've all heard about the Coronavirus and how people scrambled to get the right vaccine in a short amount of time. Let's begin with this idea: GANs could support drug and vaccine development. Trained on a relevant dataset, a GAN could help propose candidate drugs in a shorter time.

Translating images

Image translation is another application that shows the significance of this framework. For example, a GAN may be used to convert a nighttime image into a daytime image.

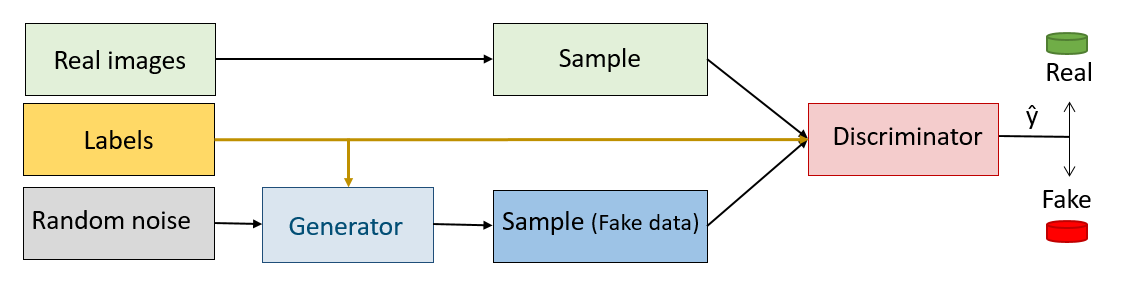

What is a Conditional GAN (cGAN), and how does it work?

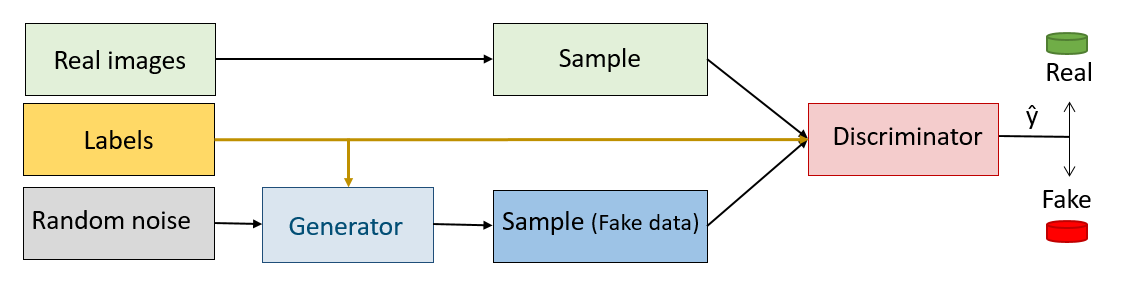

More data, like labels, may be added to GANs to help them create better images.

Figure: How Conditional GAN (cGAN) works

The purpose of a conditional GAN is to improve the GAN by giving it additional information. Because the input is richer, the generator can be guided and convergence can be accelerated.

The generator combines the random noise with the label, so it gains the ability to control its output.
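One common way to combine the two inputs, sketched here as an assumption rather than as the method of any particular paper, is to one-hot encode the label and concatenate it with the noise vector. The sizes and the helper names `one_hot` and `conditional_input` are hypothetical.

```python
import numpy as np

NUM_CLASSES, NOISE_DIM = 10, 8   # hypothetical sizes

def one_hot(labels, num_classes):
    """Encode integer class labels as one-hot row vectors."""
    return np.eye(num_classes)[labels]

def conditional_input(z, labels):
    """Concatenate each noise vector with the one-hot encoding of its label."""
    return np.concatenate([z, one_hot(labels, NUM_CLASSES)], axis=1)

rng = np.random.default_rng(0)
z = rng.normal(size=(3, NOISE_DIM))   # random noise, one row per sample
labels = np.array([0, 3, 7])          # classes we ask the generator to produce
g_in = conditional_input(z, labels)   # generator input of width NOISE_DIM + NUM_CLASSES
```

The discriminator receives the label in the same way, next to the real or generated sample, so it can judge whether a sample is both realistic and consistent with its label.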

What is the difference between GAN and convolutional neural network (CNN)?

Convolutional neural networks are a type of deep neural network designed to process spatial data such as images. A CNN is made up of many convolution and pooling layers, which make it well suited to analysing images. Convolutional neural networks are used in classification, segmentation, object detection, and face recognition.

=> A CNN has a different use than a GAN; it cannot replace a GAN, but it can act as the discriminator in a GAN, for example (in this case the CNN is part of the GAN).
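The two building blocks can be sketched in plain NumPy. This is an illustrative, unoptimised version: the toy image, the `edge_kernel` filter, and the function names are hypothetical, and real libraries add channels, padding, strides, and learned kernels.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2D cross-correlation (what deep learning libraries call convolution)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(x, size=2):
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

image = np.arange(36, dtype=float).reshape(6, 6)   # toy 6x6 "image"
edge_kernel = np.array([[1.0, -1.0]])              # horizontal-difference filter
features = conv2d(image, edge_kernel)              # shape (6, 5)
pooled = max_pool2d(features)                      # shape (3, 2)
```

The convolution slides a small filter over the image to detect a local pattern, and the pooling step keeps only the strongest response in each block, which shrinks the feature map.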

Summary

- Goodfellow and his colleagues created the generative adversarial network in 2014, and it has since become one of the most significant neural network architectures;

- The GAN consists of two neural networks: the generator and the discriminator;

- The generator generates fake data from random noise, and the discriminator classifies the generated data as fake or real;

- Conditional GAN (cGAN) is a GAN that uses labels as input data for the discriminator and generator in order to speed up and enhance convergence.
